Opportunities for mobile security with AI & Agents

AI is everywhere. Over the last year, we have seen companies release powerful models, build autonomous agents, and hype up AGI. There are now agents for almost anything: coding agents, SOC assistants, vulnerability scanners, and more. I have been trying out these tools and documenting my observations. This post briefly discusses some of the opportunities LLMs could bring to the mobile security world.

In this post, I explore five areas:

- Obfuscation

- Device Forensics

- Pentesting (Security Testing)

- Testing & Quality Assurance

- SOC Automation

Obfuscation

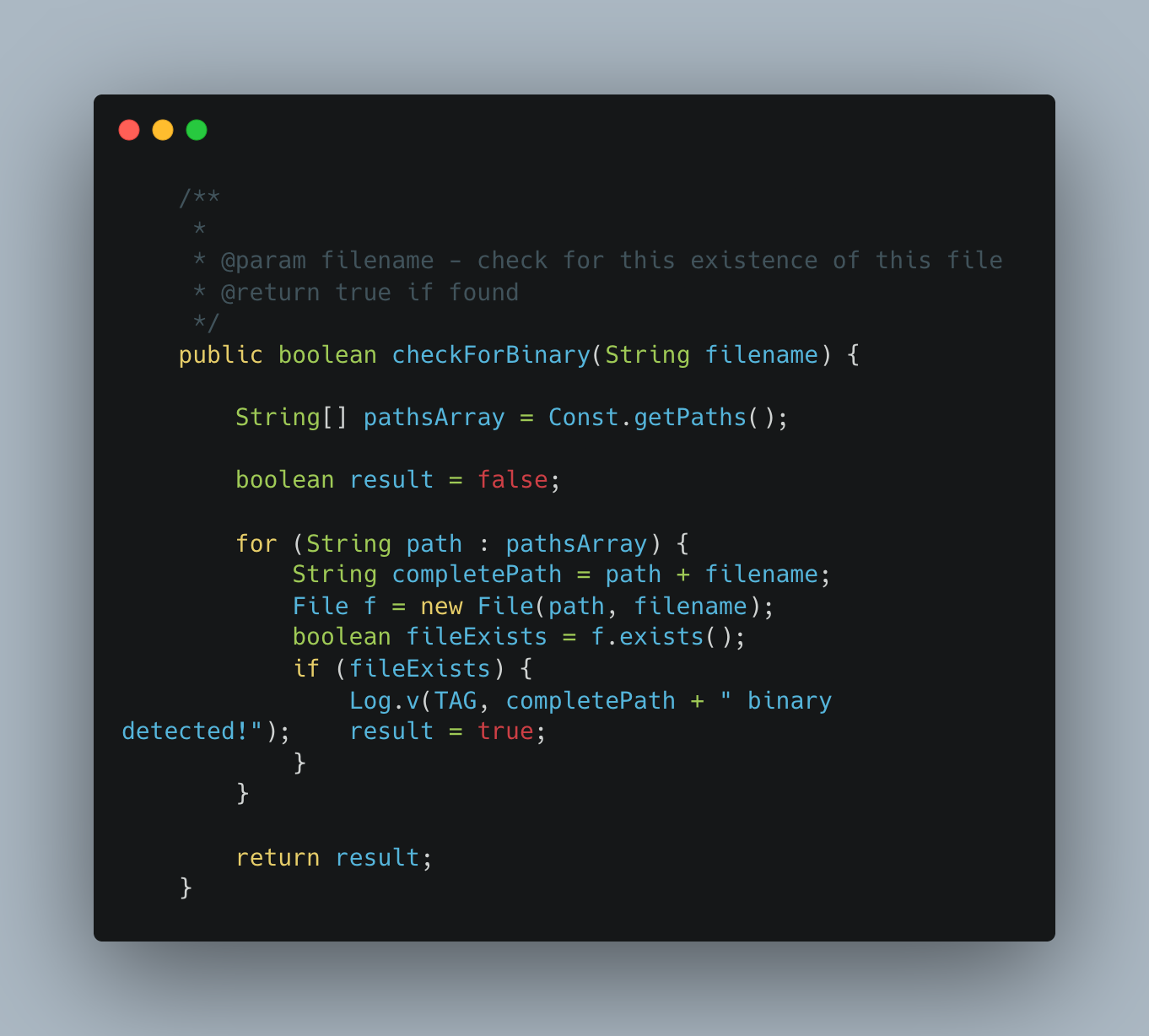

One good use of LLMs is code obfuscation. In mobile apps, code often needs to be protected from reverse engineering. There are numerous obfuscation techniques available. For example, control flow obfuscation injects dummy code and restructures branches so that the code is difficult for an attacker to read and understand.

See the screenshot below. On the left is unobfuscated root detection code taken from an open-source root detection library. On the right is the same code obfuscated using Claude Sonnet 4. As with any LLM, there is a risk of the model hallucinating; therefore, this approach should be applied with caution, ideally in a codebase that has solid unit tests. With the right prompts, the model can also do string and name obfuscation for enhanced protection.

The Claude prompt I used to generate the obfuscated code is here: https://claude.ai/share/1562ad16-695b-4748-90d3-47c2c91d2046

Control flow code obfuscation: the left side is unobfuscated, the right side is obfuscated.
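To make the control flow idea concrete outside of a screenshot, here is a minimal sketch in Python. It is not the code from the screenshot or from the root detection library. The `SU_PATHS` list and both function names are assumptions made up for illustration. The second function behaves like the first, but its logic is flattened into a state machine with an opaque predicate and a dead branch.

```python
import os

# Hypothetical list of paths whose presence suggests a rooted device.
SU_PATHS = ["/system/bin/su", "/system/xbin/su", "/sbin/su"]


def is_rooted_plain(exists=os.path.exists):
    """Readable version: rooted if any known su binary exists."""
    return any(exists(path) for path in SU_PATHS)


def is_rooted_obfuscated(exists=os.path.exists):
    """Same behavior, flattened into a state machine with dummy code."""
    state, index, found, junk = 3, 0, False, 7
    while state != 0:
        if state == 3:
            # Opaque predicate: n * (n + 1) is always even, so state 9 is never taken.
            state = 5 if (junk * (junk + 1)) % 2 == 0 else 9
        elif state == 5:
            # Loop guard: keep checking while paths remain and nothing was found.
            state = 8 if index < len(SU_PATHS) and not found else 0
        elif state == 8:
            found = exists(SU_PATHS[index])
            index += 1
            junk = (junk * 31 + index) % 97  # dummy arithmetic, never affects the result
            state = 3
        elif state == 9:
            # Dead branch: unreachable, present only to mislead a reader.
            found = not found
            state = 0
    return found
```

Because the two versions must agree on every input, this is exactly the kind of transformation where a unit test comparing both functions catches a hallucinated change.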

Device Forensics

There are many cases where device forensics reveals critical information about how a threat unfolded. Google and Apple have designed their operating systems to be closed to apps and even to the user. Unlike on PCs, users are not allowed to view, touch, or edit system internals. All sensitive operations are mediated by the OS. This is why it's increasingly difficult for endpoint vendors to scan devices and provide solid protection.

Last year, a faulty update from CrowdStrike crashed millions of Windows machines worldwide. Flights were delayed, banks could not transfer funds, email was disrupted, and so on. This was possible because security vendors have access to the Windows kernel. This kind of thing is impossible on mobile.

This dynamic is the basis for many mobile forensics companies like ZecOps. The process involves plugging the device into a PC or server and looking for traces of compromise. Having physical access to the device exposes parts of the operating system that are not reachable from an app or from device management. Forensics is not easy, and it can get messy. I have written about it before: https://ismyapppwned.com/2024/08/01/ios_device_forensics_sysdiagnose/

The video recording below shows how one can analyse an iOS device by simply asking Claude.

Device Forensics Analysis using Claude
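As a rough idea of what "looking for traces of compromise" can mean in practice, the sketch below scans a process listing for known-bad process names. It is an illustration only: the indicator names are invented, and the assumption that the command path is the last whitespace-separated field is mine, not a statement about any real sysdiagnose file format.

```python
from typing import Iterable, List, Tuple

# Hypothetical indicators of compromise; real ones would come from threat intelligence.
SUSPICIOUS_NAMES = {"examplespyd", "fakeupdaterd"}


def find_suspicious_processes(
    ps_lines: Iterable[str], indicators: set = SUSPICIOUS_NAMES
) -> List[Tuple[int, str]]:
    """Return (line_number, process_name) for each line that matches an indicator."""
    hits = []
    for number, line in enumerate(ps_lines, start=1):
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        # Assumption: the command path is the last whitespace-separated field.
        name = fields[-1].rsplit("/", 1)[-1].lower()
        if name in indicators:
            hits.append((number, name))
    return hits
```

An LLM adds value on top of a check like this by reading the surrounding lines and explaining why a hit matters, which is the tedious part of manual forensics.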

Pentesting

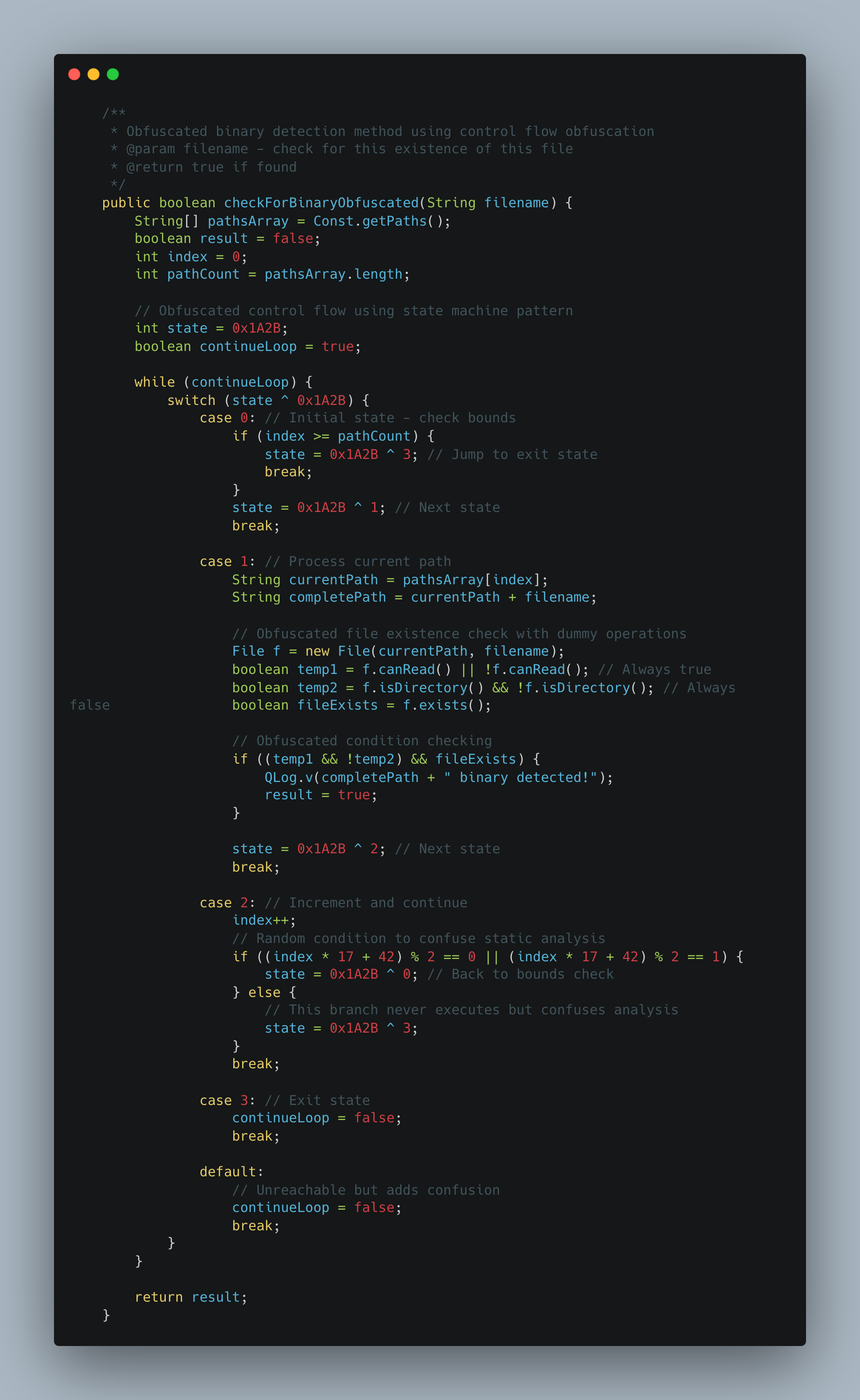

This is one of the areas where LLMs shine. I have been reverse-engineering apps since 2012: analyzing malware, figuring out how certain apps work (peeking into competitor apps 😄), debugging production issues, and so on. Detonating an app and reading obfuscated code is complex. You have to keep track of method calls and how they are invoked, understand vulnerabilities, look for loopholes, etc. Recently, Laurie released a very helpful MCP server that can help security engineers with this process. MCP (Model Context Protocol) is a protocol released by Anthropic that connects LLM clients (e.g., the ChatGPT desktop app) to specialized servers that undertake deeper analysis.

Before, a pentester drove this process by manually going through files, picking the interesting ones, categorizing them, and analyzing them. The process is iterative and laborious. Now, the LLM drives it through a specialized server that has access to different security tools.

Reverse Engineering with Claude
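The "LLM drives, server runs the tools" split can be sketched as a tiny dispatcher. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool `name` and `arguments`. Everything else here is a simplifying assumption: the `list_strings` tool is a toy stand-in, the result shape is flattened, and this is neither the official SDK nor Laurie's server.

```python
import json
import re


def list_strings(data: str, min_len: int = 4) -> list:
    """Toy stand-in for a reverse-engineering tool: extract printable ASCII runs."""
    return re.findall(r"[ -~]{%d,}" % min_len, data)


# Registry of tools the server exposes to the model (hypothetical).
TOOLS = {"list_strings": list_strings}


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC request and return the JSON-RPC response as a string."""
    request = json.loads(raw)
    response = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        response["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(response)
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        response["error"] = {"code": -32602, "message": "Unknown tool"}
        return json.dumps(response)
    # The model chooses the tool and its arguments; the server only executes.
    response["result"] = tool(**params.get("arguments", {}))
    return json.dumps(response)
```

The model never touches the binary directly. It decides which tool to call next based on the previous result, which is the iterative loop a pentester used to run by hand.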

Testing & Quality Assurance

Tencent recently published a paper titled “AppAgent: Multimodal Agents as Smartphone Users” that uses an LLM to intelligently navigate mobile UI screens and perform various actions. This includes filling in forms, intelligent (human-like) navigation, and actions like sending an email or watching a video. It works by taking a screenshot of a given UI screen and identifying the components displayed (text fields, buttons, etc.). It then takes actions such as tap or swipe.

A few years back, I was working on an application that scanned apps for security vulnerabilities dynamically. This required an agent to drive the application autonomously, which at the time meant dumb tools like monkey 🤦. A new era is upon us. See the video below.

Automated Testing
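The screenshot, understand, act cycle described above is essentially an observe-decide-act loop. Here is a minimal sketch of that loop. It is not AppAgent's code: `Element`, `Action`, and `run_agent` are names invented for illustration, and in a real system `decide` would be a call to a multimodal model rather than a plain function.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Element:
    kind: str   # e.g. "text_field" or "button"
    label: str


@dataclass
class Action:
    kind: str          # "tap", "type", or "done"
    target: str = ""   # label of the element to act on
    text: str = ""     # text to enter for "type" actions


def run_agent(
    observe: Callable[[], List[Element]],
    decide: Callable[[List[Element], List[Action]], Action],
    act: Callable[[Action], None],
    max_steps: int = 10,
) -> List[Action]:
    """Loop: read the screen, pick an action, perform it, until done or out of steps."""
    history: List[Action] = []
    for _ in range(max_steps):
        elements = observe()                 # stand-in for screenshot + UI parsing
        action = decide(elements, history)   # stand-in for the model's choice
        history.append(action)
        if action.kind == "done":
            break
        act(action)                          # stand-in for tap/swipe/type on the device
    return history
```

The `max_steps` cap matters for security testing: unlike monkey, the agent is goal-directed, but it still needs a bound so a confused model cannot loop forever.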

SOC Automation

This is one of the most obvious use cases for LLMs. Most SOC engineers spend hours writing reports and RCA memos, analyzing incidents, graphing timelines, and providing summaries to engineers. One company that jumped on this opportunity is Appdome. They aim to help end users understand threats and guide them in resolving issues on their devices. Most consumers are not technical and don't understand what "tamper" means or what an app means when it claims that their device is not "secure". Models can be instructed to explain complex threat scenarios using simple prompts like "Explain it to me like I'm 5."
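Turning a raw alert into a plain-language prompt takes very little code. The sketch below only builds the prompt text and does not call any model API. The `ThreatEvent` fields and the wording of the prompt are assumptions for illustration, not how Appdome or any other vendor does it.

```python
from dataclasses import dataclass


@dataclass
class ThreatEvent:
    name: str     # short alert name, e.g. "tamper"
    detail: str   # technical detail as reported by the detection layer


def build_explanation_prompt(
    event: ThreatEvent, audience: str = "a non-technical user"
) -> str:
    """Build a prompt asking a model to explain an alert in simple terms."""
    return (
        f"Explain the following mobile security alert to {audience}.\n"
        "Use plain language, avoid jargon, and end with one concrete next step.\n"
        f"Alert: {event.name}\n"
        f"Details: {event.detail}\n"
    )
```

For example, `build_explanation_prompt(ThreatEvent("tamper", "App signature does not match the store build"), audience="a five-year-old")` produces a prompt that can be sent to whichever model the team uses.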

Summary

Large Language Models are transforming mobile security across multiple domains. Key applications include automated code obfuscation & deobfuscation, AI-powered penetration testing that streamlines the traditionally manual process of app analysis, and intelligent mobile UI testing that can navigate screens and perform actions like a human user. Additional opportunities include device forensics analysis, where LLMs can interpret complex system data, and SOC automation that helps security teams generate reports and explain threats to non-technical users in simple terms.
